Optimizing Go Microservices for Low Latency & High Throughput

Introduction

Go (Golang) has become a popular choice for building microservices thanks to its concurrency model, efficient memory management, and compiled nature. Achieving low latency and high throughput, however, still requires careful choices in architecture, coding patterns, and system configuration. This article covers Go-level techniques for concurrency, memory, networking, and database access, and shows how Redis can serve as a cache, rate limiter, lock service, and message bus.

Understanding Latency and Throughput

Before diving into optimizations, it’s essential to understand what we’re optimizing for:

- Latency: The time taken to process a single request (measured in ms or μs)

- Throughput: The number of requests that can be processed in a given time period (measured in requests per second)

These metrics are related but can pull in opposite directions: batching requests, for example, usually raises throughput while adding latency to each individual request. The goal is to find the right balance for each use case.
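One way to make the relationship concrete is Little's law, a general queueing result: the average number of requests in flight ($L$) equals throughput ($\lambda$) multiplied by average latency ($W$). A worked example with illustrative numbers:

$$
\begin{aligned}
L &= \lambda W \\
  &= 1000~\text{req/s} \times 0.05~\text{s} = 50~\text{concurrent requests}
\end{aligned}
$$

Read the other way, a service capped at 50 concurrent requests (for example, by a worker pool of that size) cannot exceed $50 / 0.05~\text{s} = 1000$ requests per second at that latency, so lowering latency also raises the ceiling on throughput.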

Core Go Optimizations

1. Leverage Go’s Concurrency Model

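Goroutines are cheap to create (each starts with a stack of only a few kilobytes), so independent units of work can run concurrently with little overhead. When requests do not depend on each other, processing them concurrently reduces total latency from the sum of all calls to roughly the duration of the slowest one: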


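```go
import "sync"

// Sequential: total latency is the sum of every processRequest call.
func ProcessRequests(requests []Request) []Response {
	responses := make([]Response, len(requests))
	for i, req := range requests {
		responses[i] = processRequest(req)
	}
	return responses
}

// Concurrent: one goroutine per request, so total latency is roughly that of
// the slowest call. Each goroutine writes only its own index, so the slice
// needs no mutex. Request, Response and processRequest are application-defined.
// Note: this starts len(requests) goroutines; see the worker pool below for a
// bounded version.
func ProcessRequestsConcurrently(requests []Request) []Response {
	responses := make([]Response, len(requests))
	var wg sync.WaitGroup
	for i, req := range requests {
		wg.Add(1)
		go func(i int, req Request) {
			defer wg.Done()
			responses[i] = processRequest(req)
		}(i, req)
	}
	wg.Wait()
	return responses
}
```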

2. Worker Pool Pattern

Starting one goroutine per item is unbounded: a large batch can exhaust memory, file descriptors, or a downstream service’s capacity. A worker pool caps concurrency at a fixed number of goroutines:


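```go
import "sync"

// WorkerPool runs executeTask on every task using at most numWorkers
// goroutines (numWorkers must be at least 1). Task, Result and executeTask
// are application-defined.
func WorkerPool(tasks []Task, numWorkers int) []Result {
	results := make([]Result, len(tasks))
	jobs := make(chan int, len(tasks)) // buffered so the sender never blocks
	var wg sync.WaitGroup

	// Start workers.
	for w := 0; w < numWorkers; w++ {
		wg.Add(1)
		go worker(tasks, results, jobs, &wg)
	}

	// Send job indexes, then close the channel so the workers' range loops
	// end once the queue is drained.
	for j := range tasks {
		jobs <- j
	}
	close(jobs)

	// Wait for all workers to finish.
	wg.Wait()
	return results
}

// worker processes job indexes until jobs is closed. Each index is handled by
// exactly one worker, so writes to results never overlap.
func worker(tasks []Task, results []Result, jobs <-chan int, wg *sync.WaitGroup) {
	defer wg.Done()
	for j := range jobs {
		results[j] = executeTask(tasks[j])
	}
}
```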

Redis Integration

1. Using Redis as a Cache

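Redis is an in-memory key-value store. Caching the results of expensive database queries or downstream calls in Redis lets repeated requests skip that work, which cuts latency for frequently read data and takes load off the primary data store. A minimal JSON-based cache wrapper using the go-redis client: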


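```go
import (
	"context"
	"encoding/json"
	"time"

	"github.com/redis/go-redis/v9"
)

type RedisCache struct {
	client     *redis.Client
	expiration time.Duration
}

func NewRedisCache(addr string, expiration time.Duration) *RedisCache {
	client := redis.NewClient(&redis.Options{
		Addr:     addr,
		Password: "",  // Redis password (if any)
		DB:       0,   // Database to use
		PoolSize: 100, // Connection pool size
	})
	return &RedisCache{client: client, expiration: expiration}
}

// Get decodes the cached JSON value for key into value. On a cache miss it
// returns redis.Nil; callers should check errors.Is(err, redis.Nil) and treat
// that as "not cached" rather than as a failure.
func (c *RedisCache) Get(ctx context.Context, key string, value interface{}) error {
	data, err := c.client.Get(ctx, key).Bytes()
	if err != nil {
		return err
	}
	return json.Unmarshal(data, value)
}

// Set stores value as JSON with the cache's default expiration. Passing the
// request's ctx lets deadlines and cancellation reach the Redis call.
func (c *RedisCache) Set(ctx context.Context, key string, value interface{}) error {
	data, err := json.Marshal(value)
	if err != nil {
		return err
	}
	return c.client.Set(ctx, key, data, c.expiration).Err()
}
```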

2. Implementing Rate Limiting with Redis

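A sliding-window rate limiter backed by a Redis sorted set protects your microservices from overload. Each request is stored as a member scored by its timestamp; entries older than the window are trimmed, and the number that remain decides whether the new request is allowed: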


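```go
import (
	"context"
	"fmt"
	"math/rand"
	"strconv"
	"time"

	"github.com/redis/go-redis/v9"
)

// RedisRateLimiter allows at most limit requests per key within a sliding window.
type RedisRateLimiter struct {
	client *redis.Client
	limit  int
	window time.Duration
}

func NewRedisRateLimiter(redisClient *redis.Client, limit int, window time.Duration) *RedisRateLimiter {
	return &RedisRateLimiter{client: redisClient, limit: limit, window: window}
}

func (l *RedisRateLimiter) Allow(ctx context.Context, key string) (bool, error) {
	now := time.Now().UnixNano()
	windowStart := now - l.window.Nanoseconds()

	// TxPipeline wraps the commands in MULTI/EXEC so they execute atomically.
	pipe := l.client.TxPipeline()
	// Remove requests that fell out of the window.
	pipe.ZRemRangeByScore(ctx, key, "-inf", strconv.FormatInt(windowStart, 10))
	// Count the requests still in the window (before adding this one).
	countCmd := pipe.ZCard(ctx, key)
	// Record this request. The random suffix keeps members unique when two
	// requests share a timestamp. Rejected requests are recorded too.
	pipe.ZAdd(ctx, key, redis.Z{Score: float64(now), Member: fmt.Sprintf("%d-%d", now, rand.Int63())})
	// Let idle keys expire on their own.
	pipe.Expire(ctx, key, l.window)

	if _, err := pipe.Exec(ctx); err != nil {
		return false, err
	}
	// The count excludes the current request, so use < rather than <=;
	// otherwise limit+1 requests would be allowed per window.
	return countCmd.Val() < int64(l.limit), nil
}
```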

3. Distributed Locking with Redis

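A distributed lock built on Redis lets multiple service instances coordinate access to a shared resource. `SET NX` with an expiration acquires the lock, and a Lua script releases it only if the caller still holds it (the random value proves ownership), so one instance cannot delete a lock that expired and was re-acquired by another: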


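```go
import (
	"context"
	"fmt"
	"time"

	"github.com/google/uuid"
	"github.com/redis/go-redis/v9"
)

// RedisLock is a lock on a single Redis instance. Because replication is
// asynchronous, it is not safe across a failover to a replica that has not
// yet received the lock key.
type RedisLock struct {
	client     *redis.Client
	key        string
	value      string // random token identifying this holder
	expiration time.Duration
}

func NewRedisLock(client *redis.Client, resource string, expiration time.Duration) *RedisLock {
	return &RedisLock{
		client:     client,
		key:        fmt.Sprintf("lock:%s", resource),
		value:      uuid.New().String(),
		expiration: expiration,
	}
}

// Acquire sets the key only if it does not exist (SET NX) with a TTL, so a
// crashed holder cannot block others forever. It reports whether the lock was taken.
func (l *RedisLock) Acquire(ctx context.Context) (bool, error) {
	return l.client.SetNX(ctx, l.key, l.value, l.expiration).Result()
}

// releaseScript deletes the key only if it still holds our token. It is
// defined once so go-redis can reuse it by SHA (EVALSHA) on later calls.
var releaseScript = redis.NewScript(`
if redis.call("GET", KEYS[1]) == ARGV[1] then
	return redis.call("DEL", KEYS[1])
else
	return 0
end
`)

func (l *RedisLock) Release(ctx context.Context) error {
	return releaseScript.Run(ctx, l.client, []string{l.key}, l.value).Err()
}
```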

4. Advanced Caching Strategies with Redis

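A two-level cache combines a fast in-process cache (here, the Ristretto library) for the hottest keys with Redis as a shared second level. Local hits avoid a network round trip entirely, and values found in Redis are copied into the local cache: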


```go
import (
	"context"
	"encoding/json"
	"errors"
	"time"

	"github.com/dgraph-io/ristretto"
	"github.com/redis/go-redis/v9"
)

type MultiLevelCache struct {
	local    *ristretto.Cache // In-process L1 cache (Ristretto)
	redis    *redis.Client    // Shared L2 cache (Redis)
	localTTL time.Duration
	redisTTL time.Duration
}

func NewMultiLevelCache(redisAddr string) (*MultiLevelCache, error) {
	localCache, err := ristretto.NewCache(&ristretto.Config{
		NumCounters: 1e7,     // Keys tracked for admission; ~10x the expected item count
		MaxCost:     1 << 30, // Cost budget; with cost = len(value) this is about 1 GB
		BufferItems: 64,      // Value recommended by the Ristretto docs
	})
	if err != nil {
		return nil, err
	}
	redisClient := redis.NewClient(&redis.Options{
		Addr:     redisAddr,
		PoolSize: 100,
	})
	return &MultiLevelCache{
		local:    localCache,
		redis:    redisClient,
		localTTL: 1 * time.Minute,  // Short TTL bounds staleness across instances
		redisTTL: 10 * time.Minute, // Redis cache duration
	}, nil
}

// Get reports whether key was found in either level and decodes it into value.
func (c *MultiLevelCache) Get(ctx context.Context, key string, value interface{}) (bool, error) {
	// L1: in-process cache, no network round trip.
	if val, found := c.local.Get(key); found {
		return true, json.Unmarshal(val.([]byte), value)
	}
	// L2: Redis.
	data, err := c.redis.Get(ctx, key).Bytes()
	if errors.Is(err, redis.Nil) {
		return false, nil // Not found in either level
	}
	if err != nil {
		return false, err // Redis error
	}
	if err := json.Unmarshal(data, value); err != nil {
		return true, err
	}
	// Promote to L1; cost is the value size so MaxCost is measured in bytes.
	c.local.SetWithTTL(key, data, int64(len(data)), c.localTTL)
	return true, nil
}

func (c *MultiLevelCache) Set(ctx context.Context, key string, value interface{}) error {
	data, err := json.Marshal(value)
	if err != nil {
		return err
	}
	// Write to Redis first so other instances see the new value.
	if err := c.redis.Set(ctx, key, data, c.redisTTL).Err(); err != nil {
		return err
	}
	// Ristretto buffers writes, so the value may not be readable locally at once.
	c.local.SetWithTTL(key, data, int64(len(data)), c.localTTL)
	return nil
}

// Delete removes key from Redis and from this instance's local cache only;
// other instances keep their local copy until localTTL expires.
func (c *MultiLevelCache) Delete(ctx context.Context, key string) error {
	err := c.redis.Del(ctx, key).Err()
	c.local.Del(key)
	return err
}
```

5. Inter-Microservice Communication with Redis Pub/Sub

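Redis Pub/Sub offers a lightweight, low-latency way for Go microservices to exchange messages. Delivery is fire-and-forget: a message reaches only the subscribers connected at the moment it is published and is otherwise lost, so use Redis Streams when messages must survive restarts: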


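```go
import (
	"context"
	"encoding/json"
	"log"

	"github.com/redis/go-redis/v9"
)

type RedisPubSub struct {
	client *redis.Client
}

func NewRedisPubSub(addr string) *RedisPubSub {
	client := redis.NewClient(&redis.Options{
		Addr:     addr,
		PoolSize: 100,
	})
	return &RedisPubSub{client: client}
}

// Publish JSON-encodes message and sends it to every current subscriber of channel.
func (ps *RedisPubSub) Publish(ctx context.Context, channel string, message interface{}) error {
	data, err := json.Marshal(message)
	if err != nil {
		return err
	}
	return ps.client.Publish(ctx, channel, data).Err()
}

// Subscribe calls handler for each message on channel. It blocks until ctx is
// cancelled, so callers normally run it in its own goroutine.
func (ps *RedisPubSub) Subscribe(ctx context.Context, channel string, handler func([]byte)) error {
	pubsub := ps.client.Subscribe(ctx, channel)
	defer pubsub.Close()
	// Wait for the subscription confirmation so connection errors surface here.
	if _, err := pubsub.Receive(ctx); err != nil {
		return err
	}
	ch := pubsub.Channel()
	for {
		select {
		case <-ctx.Done():
			return ctx.Err()
		case msg, ok := <-ch:
			if !ok {
				return nil // subscription closed
			}
			handler([]byte(msg.Payload))
		}
	}
}

// Usage example (Order and processOrder are application-defined):
func StartSubscriber(ctx context.Context, ps *RedisPubSub) {
	go func() {
		err := ps.Subscribe(ctx, "orders", func(data []byte) {
			var order Order
			if err := json.Unmarshal(data, &order); err != nil {
				log.Printf("invalid order message: %v", err)
				return
			}
			processOrder(order)
		})
		// log.Fatalf here would kill the whole service; log and let the caller decide.
		if err != nil && ctx.Err() == nil {
			log.Printf("subscribe error: %v", err)
		}
	}()
}
```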

Memory Optimization Techniques

1. Object Pooling

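Reuse objects to reduce garbage collection pressure. `sync.Pool` keeps released objects available for reuse, but the runtime may discard pooled items at any garbage collection, so it suits short-lived scratch objects such as buffers, not resources that need explicit cleanup such as connections: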


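```go
import (
	"bytes"
	"sync"
)

// bufferPool hands out reusable *bytes.Buffer values.
var bufferPool = sync.Pool{
	New: func() interface{} {
		return new(bytes.Buffer)
	},
}

func ProcessWithPool() {
	buf := bufferPool.Get().(*bytes.Buffer)
	defer func() {
		buf.Reset() // clear contents so the next user starts empty
		bufferPool.Put(buf)
	}()

	// Use buf for processing...
	// Do not keep references to buf (or buf.Bytes()) after this function returns.
}
```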
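A concrete variant of the pattern shows two details that matter in practice: copy the result out before the buffer goes back to the pool, and do not pool very large buffers. A sketch; `JoinWithPool`, `bufPool` and the `maxPooledCap` threshold are illustrative choices:

```go
package main

import (
	"bytes"
	"sync"
)

var bufPool = sync.Pool{
	New: func() any { return new(bytes.Buffer) },
}

// maxPooledCap is an illustrative threshold: larger buffers are dropped
// instead of pooled so one oversized request does not pin memory.
const maxPooledCap = 64 << 10

// JoinWithPool concatenates parts using a pooled buffer. It returns
// buf.String(), which copies the data; returning buf.Bytes() would alias
// memory that the next pool user overwrites.
func JoinWithPool(parts []string, sep string) string {
	buf := bufPool.Get().(*bytes.Buffer)
	defer func() {
		if buf.Cap() <= maxPooledCap {
			buf.Reset()
			bufPool.Put(buf)
		}
	}()
	for i, p := range parts {
		if i > 0 {
			buf.WriteString(sep)
		}
		buf.WriteString(p)
	}
	return buf.String()
}
```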

2. Reducing Memory Allocations

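Every heap allocation adds work for the garbage collector. When the final size of a slice is known, allocating its full capacity up front avoids the repeated grow-and-copy steps that append otherwise performs: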


```go
// Less efficient: out starts with zero capacity, so append must allocate a
// larger backing array and copy the elements several times as it grows.
func SquaresGrow(input []int) []int {
	var out []int
	for _, v := range input {
		out = append(out, v*v)
	}
	return out
}

// Better: the final length is known, so allocate the backing array once.
func SquaresPrealloc(input []int) []int {
	out := make([]int, 0, len(input))
	for _, v := range input {
		out = append(out, v*v)
	}
	return out
}
```
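To check that a change like this actually reduces allocations, the standard library's `testing.AllocsPerRun` reports the average number of heap allocations per call. A self-contained sketch; the function names and the size of 1000 are illustrative:

```go
package main

import "testing"

var sink []int // package-level sink so the compiler cannot discard results

func appendGrow(n int) []int {
	var s []int
	for i := 0; i < n; i++ {
		s = append(s, i)
	}
	return s
}

func appendPrealloc(n int) []int {
	s := make([]int, 0, n)
	for i := 0; i < n; i++ {
		s = append(s, i)
	}
	return s
}

// measureAllocs returns the average heap allocations per call for each version.
func measureAllocs(n int) (grow, prealloc float64) {
	grow = testing.AllocsPerRun(100, func() { sink = appendGrow(n) })
	prealloc = testing.AllocsPerRun(100, func() { sink = appendPrealloc(n) })
	return grow, prealloc
}
```

In a test file, `go test -bench=. -benchmem` reports the same allocation counts for benchmarks.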

Figure: Redis and Caching Strategy Comparison

Network Optimization

1. Connection Pooling

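Reuse connections to avoid a TCP (and TLS) handshake on every request. Go’s `http.Transport` pools connections automatically, but its default `MaxIdleConnsPerHost` is only 2, which causes connection churn when a service makes many concurrent calls to the same host: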


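```go
import (
	"net/http"
	"time"
)

// Share one client (and its Transport) across the service; creating a new
// client per request defeats connection reuse.
var httpClient = &http.Client{
	Transport: &http.Transport{
		MaxIdleConns:        100,              // idle connections kept across all hosts
		MaxIdleConnsPerHost: 100,              // the default is only 2
		IdleConnTimeout:     90 * time.Second, // close connections idle longer than this
	},
	Timeout: 10 * time.Second, // overall limit per request, including reading the body
}
```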

2. Using HTTP/2 and gRPC

gRPC runs over HTTP/2 and encodes messages with Protocol Buffers, which gives it several performance advantages over JSON on HTTP/1.1:

- Multiplexing multiple requests over a single connection

- Header compression

- Binary protocol efficiency


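```go
import (
	"time"

	"google.golang.org/grpc"
	"google.golang.org/grpc/keepalive"
)

func NewGRPCServer() *grpc.Server {
	return grpc.NewServer(
		grpc.KeepaliveParams(keepalive.ServerParameters{
			MaxConnectionIdle: 5 * time.Minute,  // close connections with no active RPCs
			Time:              20 * time.Second, // ping the client after this much inactivity
			Timeout:           1 * time.Second,  // close if the ping is not acknowledged
		}),
	)
}
```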

Database Optimizations

1. Connection Pooling

A `*sql.DB` is itself a connection pool; size it to what the database server can handle:


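```go
import (
	"database/sql"
	"time"

	_ "github.com/lib/pq" // registers the "postgres" driver
)

func openDB(connectionString string) (*sql.DB, error) {
	db, err := sql.Open("postgres", connectionString) // validates args; connects lazily
	if err != nil {
		return nil, err
	}
	db.SetMaxOpenConns(25)                 // upper bound on concurrent connections
	db.SetMaxIdleConns(25)                 // keep that many open between requests
	db.SetConnMaxLifetime(5 * time.Minute) // recycle connections periodically
	return db, nil
}
```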

2. Batch Processing

Sending many rows in one statement replaces N round trips to the database with one:


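```go
import (
	"context"
	"database/sql"
	"fmt"
	"strings"
)

// BatchInsert inserts all users with one multi-row INSERT (PostgreSQL $n
// placeholders). PostgreSQL accepts at most 65535 bind parameters per
// statement, so large slices must be split into several batches.
func BatchInsert(ctx context.Context, db *sql.DB, users []User) error {
	if len(users) == 0 {
		return nil // an INSERT with no VALUES rows would be invalid SQL
	}
	var sb strings.Builder // avoids re-copying the query on every +=
	sb.WriteString("INSERT INTO users(id, name, email) VALUES ")
	vals := make([]interface{}, 0, len(users)*3)
	for i, user := range users {
		if i > 0 {
			sb.WriteString(", ")
		}
		fmt.Fprintf(&sb, "($%d, $%d, $%d)", i*3+1, i*3+2, i*3+3)
		vals = append(vals, user.ID, user.Name, user.Email)
	}
	_, err := db.ExecContext(ctx, sb.String(), vals...)
	return err
}
```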
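Splitting a large slice into fixed-size batches keeps each statement under the parameter limit. A generic helper sketch (Go 1.18+); `chunks` is an illustrative name, and on Go 1.23+ the standard library's `slices.Chunk` provides the same split as an iterator:

```go
package main

// chunks splits items into consecutive slices of at most size elements.
// The sub-slices share the original backing array.
func chunks[T any](items []T, size int) [][]T {
	if size <= 0 {
		panic("chunk size must be positive")
	}
	out := make([][]T, 0, (len(items)+size-1)/size)
	for start := 0; start < len(items); start += size {
		end := start + size
		if end > len(items) {
			end = len(items)
		}
		out = append(out, items[start:end])
	}
	return out
}
```

For example, batches of 1,000 users use 3,000 parameters per statement, well under the limit; the best batch size depends on row width and is worth measuring.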

Figure: Microservice Architecture with Redis

System-Level Optimizations

1. CPU Profiling and Optimization

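Identify bottlenecks with Go’s built-in profiler. The command below assumes the service exposes the `net/http/pprof` endpoints on localhost:6060; by default it collects a 30-second CPU profile: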


```shell
go tool pprof http://localhost:6060/debug/pprof/profile
```

2. Tuning Operating System Parameters

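For network-intensive services, raise kernel limits such as the listen backlog. `net.core.somaxconn` caps the queue of established connections waiting to be accepted, and on Linux Go’s `net` package reads it to size each listener’s backlog. `sysctl -w` lasts until reboot; add the setting to a file under /etc/sysctl.d/ to persist it: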


```shell
sysctl -w net.core.somaxconn=65535
```

Service Mesh and Load Balancing

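Route requests intelligently and spread load evenly across service instances. A service mesh (such as Istio or Linkerd) or a dedicated load balancer usually does this outside the application.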
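When a client must balance load itself, the simplest policy is round robin. A minimal sketch; `RoundRobin` is an illustrative type, and unlike a service mesh or gRPC’s built-in `round_robin` policy it performs no health checks:

```go
package main

import (
	"errors"
	"sync/atomic"
)

// RoundRobin hands out backend addresses in turn; Pick is safe for concurrent use.
type RoundRobin struct {
	backends []string
	next     atomic.Uint64
}

func NewRoundRobin(backends []string) (*RoundRobin, error) {
	if len(backends) == 0 {
		return nil, errors.New("no backends")
	}
	return &RoundRobin{backends: backends}, nil
}

// Pick returns the next backend; the atomic counter avoids a mutex on the hot path.
func (r *RoundRobin) Pick() string {
	n := r.next.Add(1) - 1
	return r.backends[n%uint64(len(r.backends))]
}
```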

Monitoring and Observability

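Record request counts and latencies for every endpoint so bottlenecks and regressions show up in dashboards and alerts. The middleware below records both as Prometheus metrics: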


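```go
import (
	"net/http"
	"strconv"
	"time"

	"github.com/go-chi/chi/v5/middleware"
	"github.com/prometheus/client_golang/prometheus"
	"github.com/prometheus/client_golang/prometheus/promauto"
)

var requestsTotal = promauto.NewCounterVec(prometheus.CounterOpts{
	Name: "http_requests_total", Help: "HTTP requests by method, path and status.",
}, []string{"method", "path", "status"})

var requestDuration = promauto.NewHistogramVec(prometheus.HistogramOpts{
	Name: "http_request_duration_seconds", Help: "HTTP request latency in seconds.",
}, []string{"method", "path"})

func instrumentHandler(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		start := time.Now()
		ww := middleware.NewWrapResponseWriter(w, r.ProtoMajor) // captures the status code
		next.ServeHTTP(ww, r)
		status := ww.Status()
		if status == 0 {
			status = http.StatusOK // nothing written: net/http replies 200
		}
		// Prefer a route pattern over r.URL.Path to keep label cardinality bounded.
		requestsTotal.WithLabelValues(r.Method, r.URL.Path, strconv.Itoa(status)).Inc()
		// Seconds (float64) keeps sub-millisecond precision, unlike Milliseconds().
		requestDuration.WithLabelValues(r.Method, r.URL.Path).Observe(time.Since(start).Seconds())
	})
}
```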

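Benchmark the service regularly and under different load levels. Go’s testing package supports HTTP benchmarks like the one below; run them with `go test -bench=. -benchmem` to report allocations as well as time per request: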


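```go
import (
	"io"
	"net/http/httptest"
	"testing"
)

// NewAPIHandler is the service's own http.Handler constructor.
func BenchmarkEndpoint(b *testing.B) {
	server := httptest.NewServer(NewAPIHandler())
	defer server.Close()
	client := server.Client()

	b.ReportAllocs()
	b.ResetTimer()
	for i := 0; i < b.N; i++ {
		resp, err := client.Get(server.URL + "/api/resource")
		if err != nil {
			b.Fatal(err)
		}
		// Drain the body so the keep-alive connection is reused between iterations.
		io.Copy(io.Discard, resp.Body)
		resp.Body.Close()
	}
}
```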

Below is a visualization of the impact of various optimizations on a typical Go microservice:

Redis Distributed System Architecture

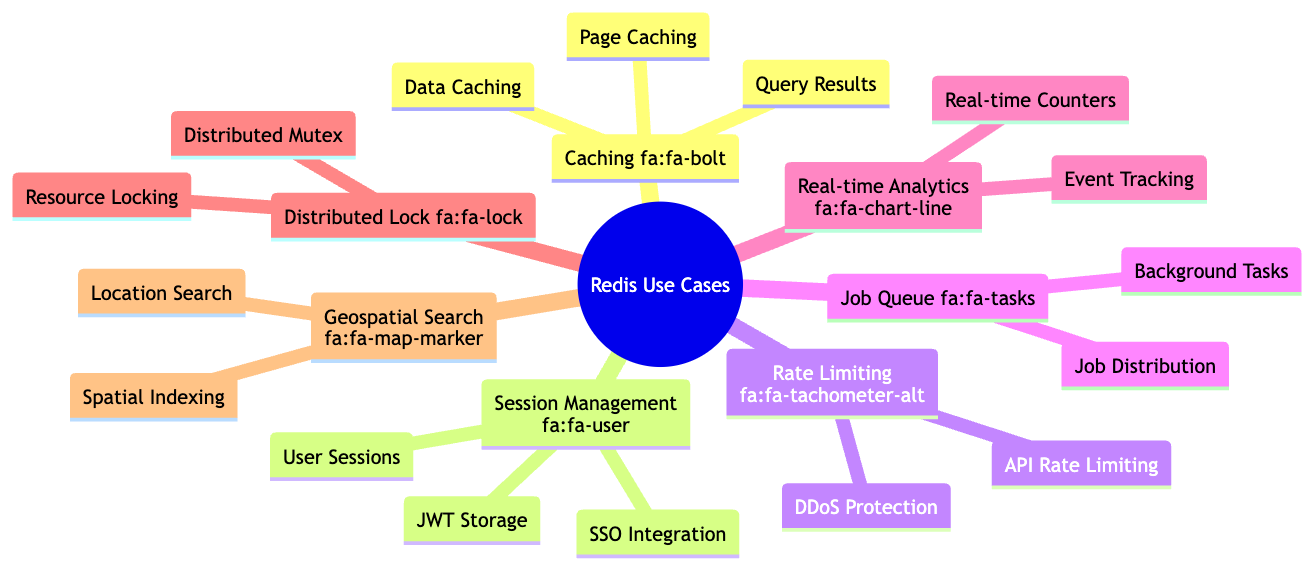

Redis Use Cases

Conclusion

Optimizing Go microservices for low latency and high throughput requires a multi-faceted approach, and Redis plays a central role in several of the strategies covered here:

- Leverage Go’s concurrency model with goroutines and channels, bounded by worker pools

- Implement efficient memory management with pooling and fewer allocations

- Optimize network communications with connection reuse and modern protocols

- Implement multi-level caching strategies with Redis:

  - Local memory cache (first line of defense)

  - Redis cache (distributed, scalable second level)

  - Cache invalidation mechanisms to ensure data consistency

- Use appropriate database access patterns, such as connection pooling and batching

- Continuously monitor and performance-test your services

Remember that Redis can be used not just for caching, but also for:

- Rate limiting

- Session management

- Distributed locking

- Inter-microservice communication (Pub/Sub)

- Job queuing

The most effective strategy combines these techniques according to your workload’s characteristics and bottlenecks. Avoid premature optimization, which adds complexity without a measured benefit; always measure performance before and after a change to confirm it is a real improvement.

When integrating Redis into your microservice architecture, consider the following factors:

- Caching Strategy: What data to cache, for how long, and how to invalidate it

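- Memory Management: Set a `maxmemory` limit and an eviction policy (`maxmemory-policy`, for example `allkeys-lru` for a pure cache)

- Scalability: Use Redis Sentinel for high availability, or Redis Cluster to shard data across nodes with built-in failover

- Durability: Configure RDB snapshots and/or AOF (append-only file) persistence to match your data-durability requirements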

By applying these strategies, you can build Go microservices that scale and sustain high load with low latency. Used strategically, Redis can substantially reduce response times and improve the scalability of your services.